I already described in a previous post why databases are different to applications from a development perspective. The most impactful difference in my opinion is, that you can deploy applications by state. You pick a certain point in your version control system, build your application and have a certain state of your application at hand.

Best case, this state is backed by a number of self-tests and has a unique version number (well, it has one by the hash of your specific commit). You take that build and can deploy it, no matter if your customer had a different version (state) of your application installed or not.

Of course this is simplified, there might be external APIs, libraries, configuration files or – who had thought – databases which prevent you from easily switching application states, but normally such constraints are relatively few.

Databases are different. You can’t preserve state in a database once it’s shipped to a customer. The moment you give it out of your hands, a database starts to change its state continuously. You cannot simply “install” a new version of your database schema on a customer database – there’s data to be preserved and in most cases it’s not even possible to clearly separate schema and data.

And yes, data changes for applications, too, but in my experience database schema is usually much tighter coupled to the data than applications are (which makes sense, doesn’t it?).

So, how do we overcome these difficulties, especially if we are aiming towards frequent releases and agile, collaborative development?

State-based deployment via Schema compare

The basic idea of this approach is that you develop on a separate database and this database is the primary “holder of truth”. You can decide when this primary database is in a state suitable to be the next release.

This state can be saved via doing a full backup for example and once you want to deploy it you do a schema compare (depending on your solution even data compare) between your primary database and the customer’s database.

There are several commercial solutions which try to support you in this way of deployment, ranging from quite cheap to pretty expensive ones. RedGate has a whole suite around that approach, DbForge comes with some functions in their enterprise products and even the SQL Server Data Tools (SSDT) for Visual Studio encourages developers to “publish” their current state into a database, scripting the changes found via direct compare.

The results of these compare functions are very different in quality, so if you really want to go that route (and I will show you why you shouldn’t), go with the expensive tools. The risk to destroy your target database, lose data or introduce unwanted side-effects with running such an automatically created script is – in my experience – very high.

Confusion in the Sith Archives

You are back on the Deathstar and your Sith-Lord in charge calls for your database expertise. Although the will for order is not very spread amongst sith, this special commander wants you to make some modifications to a database holding information about the most powerful sith of all times.

The person who once created solution was not very skilled, didn’t know anything about data normalization and you now look at a table like this:

| ID | Name | Alive |

|---|---|---|

| 1 | Sidious, Darth | 0 |

| 2 | Kun, Exar | 0 |

| 3 | Vader, Darth | 1 |

Your sith-commander wants you to improve the table so it’s easier to search for specific names, therefore he wants a new column: “PRENAME” (for simplicity he agrees that “Darth” may be used as Prename, too). “NAME” should only hold the surname in future.

Should be a pretty easy task, shouldn’t it? You immediately start on your main development database by doing an ALTER TABLE statement, adding a new “PRENAME” column. Afterwards you do a simple update statement like this:

update powerful_sith SET PRENAME = trim(substr(NAME, instr(NAME, ',')+1)), NAME = trim(substr(NAME, 0, instr(NAME, ',')))

(Yes, it would be a good idea to introduce another column “SURNAME” and save the second part of the name into that column, leaving “NAME” untouched first. Then in a second step you should check whether SURNAME || ‘, ‘ || PRENAME equals NAME so you can be sure nothing went wrong before finally deleting NAME and renaming SURNAME, but I wanted to keep the main example as simple as possible)

Now you have the preferred state in your main development database and can go on doing a backup and a schema compare for deployment into production database. Shouldn’t be too hard, should it?

Even if your tool of choice is able to issue the first change (table schema) in a useful way (and that’s not sure – I saw pretty strange and even terribly destructive approaches done by automatic comparison tools when you add or remove table columns), it won’t be able to do the second correctly, because how should your tool know the business logic behind the data?

It will require you to run some migration scripts after the script done by schema-compare or weaving that specific updates into the automatically created script. I expect there to be much headache and great confusion.

Migration-based database development

There is a different approach to database development and deployment which honors the different nature of databases: You always work with “migrations” or “transitions”. Instead of saving a certain state of your database, you save all the steps needed to get there.

This means that you have to script every change you are doing to your database and store these scripts in a manner that you can (re-)apply them to any target-database, leading to the same behavioural and functional state (but without changing things specific to a customer).

It means that you might have to change the way you are currently working – especially if you use a visual IDE to change schema objects. Every change you are doing has to be scripted.

In the end you are doing a similar thing as a schema-compare would do, you just do it in a much more controlled and safe way. And you do it right while you are developing, so you know all the business logic and caveats.

But isn’t that a terrible decrease of speed and productivity? Doesn’t that sound like tedious work (most developers hate tedious work more than anything else – myself included)?

It might seem so at the first glance, but let’s look at the benefits:

- It enables a rock-solid deployment process because you are doing exactly the same when updating your local database, your development database, your QA database, your acceptance database and your production database. It’s always the same process and it can be automated.

- You can easily bring a (CI-)database to the point you want by loading a baseline backup and running all migration scripts until a certain point.

- If you do it right you have database versioning and change documentation included

- The approach encourages small changes at a time, leading to less risky deployments

- It enables and empowers continuous integration because you can easily transport your functional stat to different data sets (e.g. test data)

- You know exactly what’s happening. That’s in my opinion the greatest benefit of all, because it gives you confidence that what you’re delivering will work. It also gives you enormous flexibility and lets you solve any kind of challenge – even and especially ones which need specific business logic.

It’s not fluffy unicorn magic

Scripting all your database changes into a bunch of files won’t suddenly free you from all problems and it won’t free you from using your brain either. In fact, having some scripted files flying around without a good process and some tools in the mix will make things even worse.

I met a guy who scripted all of his database changes, organized them in large folder structures, had a strict naming convention and rules who had to put the files in which folder – and when he deployed them he run them by hand. Often more than a hundred files. In the correct order. By hand. Sometimes late in the night because of the “We need to release right now” pressure.

I myself could not guarantee to run hundreds of SQL files, organized in different folders by hand without making a mistake. In fact, I doubt he could. And some customer issues raised after the deployments support my doubts.

So to do migration based database devlopment right, you have to put some things around your scripts – which come with their own benefits.

Automated migration

Maybe the most important thing you want to have when using migration scripts is some tool which automates their deployment. You want to have some files like that

migrations/

1.0/

1.0.1_alter_table_powerful_sith.sql

1.0.2_update_table_powerful_sith_split_name.sql

1.1/

1.1.1_add_view_V_POWERFUL_SITH.sql

and a tool which applies them in the right order. But that’s not all, you want a tool which keeps track of which migration script is already run against your target database, when it was run and maybe who run it.

There are dozens of free tools available for database migration, unfortunately many of them (e.g. liquibase) use some kind of DSL (domain specific language) and won’t allow you to use plain SQL for your migrations.

This might be a nice idea when you come from the abstraction layer of application development (wouldn’t it be nice to have some DSL which allows us to run our – simple – migrations on any database?).

It is totally insufficient if you are developing for a specific database like Oracle. You are the hero of SQL, PL/SQL or TSQL and want to use your superpowers in migration scripts.

Therefore my favourite is flyway.

It brings all the security, automation, flexibility and simplicity I’d expect from such a tool and allows you to work with plain, vendor-specific SQL scripts. It’s Open Source, written in java and if its out-of-the-box-features are not flexible enough there are several possibilities to easily expand it by hooks.

(If you are more into .NET you might want to give Evolve a try, a .NET implementation heavily inspired by flyway).

Versioning your database

Wouldn’t it be great to always know exactly which version/state your database schema has? Wouldn’t it be great to have some documentation about what’s been changed?

If you are using flyway or a similiar tool, that’s right built-in. And it’s automated.

The same guy I mentioned earlier came up with a concept for documenting features right in the database after I told him about how such things are handled by migration tools like flyway. The functionality/table design he implemented included everything you’d want: description of a feature, version number, who was responsible for it, which scripts belong to it etc.

The only problem was: You had to fill-in that documentation by hand (or script it by hand into the migration scripts). There was no automation around it at all. So after a view weeks we couldn’t rely on the information anymore because we couldn’t be sure that everyone really always put in the correct data when doing a migration. It was a sad example of good intentions done terribly wrong.

If you want things to be reliable, automate them. Machines are so much better in following rules than humans.

Idempotent migration scripts

In theory you shouldn’t run migration scripts more than once. In a perfect world you would always apply each migration script exactly once – and then go on with the next.

In reality I experienced it to be very good practice to write your migration scripts idempotent. That means that a migration script can be run several times, always leading to the same output.

For example if you are about to alter a table you can script that with the ALTER TABLE statement. That wouldn’t be idempotent because the second time you run it, it would raise an exception (because the new column or already exists).

If you write your migration script like this:

declare

v_count integer;

begin

select count(*) into v_count from user_columns where table_name ='POWERFUL_SITH' and column_name ='PRENAME';

if ( v_count <= 0 ) then

execute immediate 'alter table POWERFUL_SITH add PRENAME varchar2(100)';

else

dbms_output.put_line('POWERFUL_SITH.PRENAME already exists');

end if;

end;

/

you are able to run it again and again, always leading to the same output: A table with the column PRENAME.

In my opinion you should always try to do your scripts as idempotent as possible. It will make your migrations more error-prone and it will be easy to extract a certain migration as hotfix for a production database. It will also make development easier.

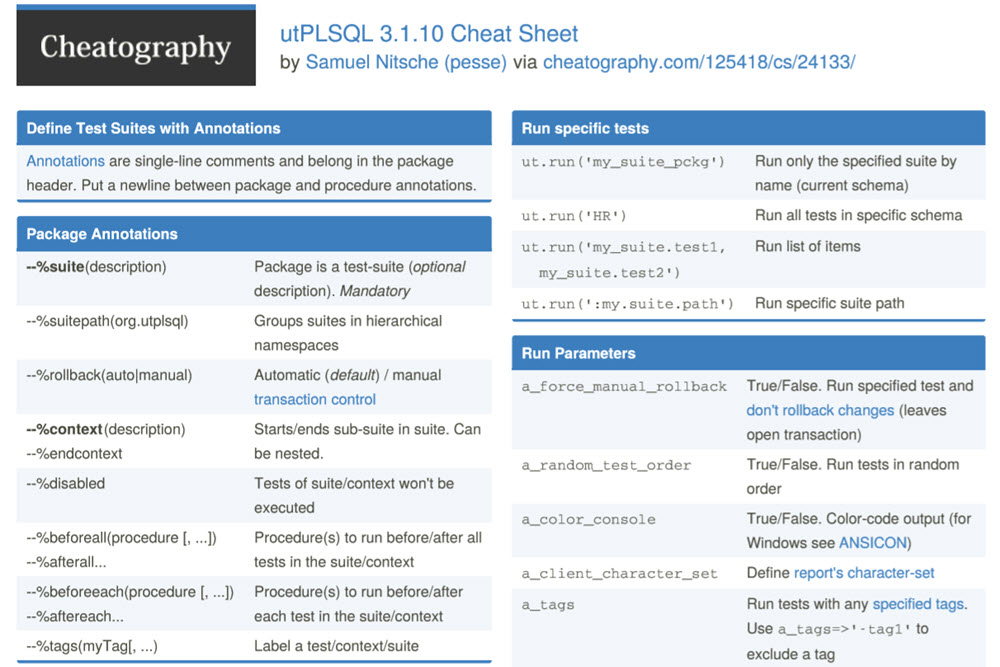

Migration testing

When I started to dive into the topic of database self-testing I stumbled over a blog post which described how you could also test the outcomes of your migration scripts and roll back with an error when something unexpected happened. I found it quite interesting and tried to include it into some of my migration scripts.

While I still think the idea is charming, at least for oracle development it doesn’t feel fitting. This is primary to the reason that you can’t roll back DDL in oracle (I know there are some ways in Oracle 12c, but overall it’s way too complicated and doesn’t work as painless as in SQL Server). I still include it for some complicated data migrations once in a while.

What you can and should do instead is adding a full run of your (hopefully existant) self-testing suite after every migration. I did so with a simple powershell script on my local machine and making it harder for me to not do a full test-run supported me in developing a healthy testing-habit.

Conclusion

I did not manage to include all topics related to migration-based database development in this article, so you can expect more to come in future posts.

I myself shifted some time ago and I didn’t regret it. I think it’s an approach which greatly enables and empowers agile, confident database development and encourages you to embrace change even with monolithic systems like databases.

I highly recommend everyone to read the excellent article of Martin Fowler and Pramod Sadalage on evolutionary database design – which levels this concept one (or two) step further. It was also my initial inspiration to change the way I do database development.

Let me know what you think about it and what your experiences are with migration-based database development (you can also do so via twitter)

4 Comments

Ben Einhorn · November 29, 2017 at 2:49 am

I’d probably add a check wether an existing column named PRENAME fits the requirements, just to make sure it is alright. In more complex operations those things tend to get overlooked a lot, also knowing some so-called developers and their habits it just seems a good idea.

Pesse · November 29, 2017 at 2:54 am

You’re right, in fact I have a snippet for those things in my daily work which also checks for correct type of column. Thanks for the hint!

jon49 · April 4, 2018 at 5:11 pm

> If you want things to be reliable, automate them. Machines are so much better in following rules than humans.

Isn’t this what SQL Server Data Tools does for you? It saves each migration too. So you have a history of them. It let’s you know if you need to refresh views, etc. It gives you static typing, so when you make a change it will tell you what other views/stored procs/functions you have broken and need to fix before you release the update. It’s not perfect, but it sure does help. After you have it generate the migration script for you you can look over it make sure it is what you expected and then test it on your dev database. It doesn’t take the place of your unit tests, but it sure does give you an added boost of confidence. Don’t get me wrong there are some things that it still gets wrong, but with time I would imagine that would be fixed.

Pesse · April 5, 2018 at 2:39 pm

I have very bad experiences with SSDT in Visual Studio, though I have to say I’m no expert with Visual Studio. I at least witnessed the following things happening when migration scripts created by SSDT were used without reviewing them very carefully:

– Wrong order of dependent objects

– Identity-columns getting messed up

– Unintended data change on alter table

The migration scripts created also look very ugly, verbose and hard to read to me, but that’s a matter of taste. Also, you can’t solve the data problem as shown in the example with SSDT migration scripts – only schema is covered as far as I know.

For me, SSDT is a bit like FrontPage in HTML4-times: You could do pretty nice things but you were in terrible trouble if you used it without knowing the basics. I would not rely on the auto-migration scripts if you are not already used to work with migration scripts. I would always recommend to start with a manual approach, get a good basic understanding of the techniques and challenges and go on from there with more automation.